Data Analysis and the Examination and Study Phase

Evaluation and Study

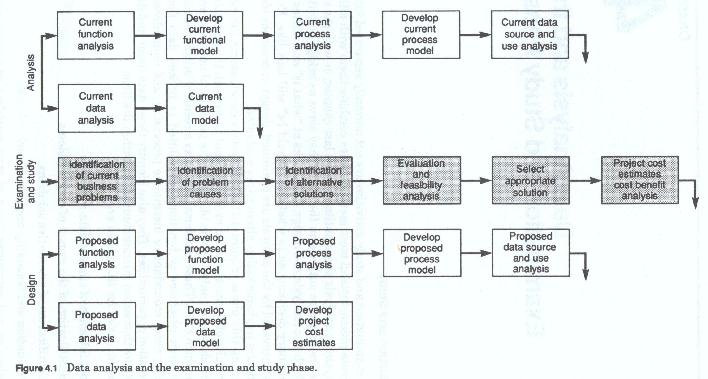

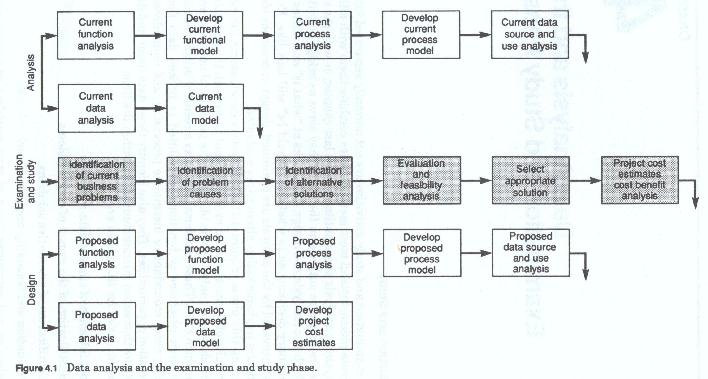

The examination and study activities are not normally separated into a phase of their own. Instead, most methodologies combine them with either the systems analysis phase outlined in the last chapter or the systems design phase outlined in the next. They serve as bridge activities in which the analysis and design team looks at "what is" and makes its preliminary decisions as to what the "should be" will look like. Problems uncovered by the analysis are examined, design approaches are identified, and implementation alternatives are evaluated.

During this phase (figure 4-1) of the system development life cycle, the results of the analysis are examined and, using both the narratives and the models, the analysis team identifies the procedural and data problems which exist. These problems may result from misplaced functions, split processes or functions, convoluted or broken data flows, missing data, redundant or incomplete processing, and unaddressed automation opportunities.

The team also identifies those data items and data problems that cause miscommunication within the firm.

The activities for this phase are:

Each activity examines both data and process, since for the most part they are interwoven. Problems with data and problems with process are inseparable, and in most cases one cannot be changed without changing the other.

Data components are evaluated to determine whether the data gathered by the firm is:

a. needed

b. verified or validated in the appropriate manner

c. useful in the form acquired

d. acquired at the appropriate point, by the appropriate functional unit

e. complete, accurate and reliable

f. made available to all functional areas which need it

g. saved for an appropriate length of time

h. modified by the appropriate unit in a correct and timely manner

i. discarded when it is no longer of use to the firm

j. correctly and appropriately identified when it is used by the firm

k. appropriately documented as to the type and mode of transformation when it does not appear in its original form

l. appropriately categorized as to its sensitivity and criticality to the firm.

Most importantly, data items are evaluated to determine whether they are defined properly and consistently, if they are defined at all.
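The check for proper and consistent definition can be sketched as a simple comparison of how each functional unit defines a data item. The following is a minimal illustration only; the data items, unit names, and definitions are hypothetical and are not drawn from any actual analysis.

```python
from collections import defaultdict

# Hypothetical data dictionary entries gathered during analysis:
# (data item, functional unit, definition as that unit uses it).
definitions = [
    ("customer", "Sales", "any party that has placed an order"),
    ("customer", "Billing", "any party with an open account"),
    ("order_date", "Sales", "date the order was received"),
    ("order_date", "Shipping", "date the order was received"),
    ("region_code", "Marketing", ""),  # used, but never defined
]


def find_definition_problems(entries):
    """Group definitions by item and report undefined or conflicting ones."""
    by_item = defaultdict(set)
    for item, _unit, text in entries:
        by_item[item].add(text.strip().lower())

    problems = {}
    for item, texts in by_item.items():
        if "" in texts:
            problems[item] = "undefined in at least one unit"
        elif len(texts) > 1:
            problems[item] = "defined inconsistently"
    return problems


problems = find_definition_problems(definitions)
for item, issue in sorted(problems.items()):
    print(f"{item}: {issue}")
```

In practice the comparison of definitions would be a matter of human judgment rather than string equality; the sketch only shows where such a review would look.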

The evaluation of the data components seeks to achieve an end-to-end test of the analysis products, looking for inconsistent references, missing data or missing processes, missing documents or transactions, overlooked activities, functions, inputs or outputs, etc.

In many respects this evaluation should be conducted in conjunction with the other evaluation processes. The analyst and the user alike should be looking for "holes" in the analysis documentation, to determine both its "correctness" and its completeness.

Identification of current data problems

Just about every business system has problems with respect to data. The differences between newer systems and older systems are reflected in the types of problems and the number of problems.

Older systems have problems with inaccurately defined data, missing data and data which has become too inflexible to support current needs. This is typified by forms containing fields which are used by different areas for different reasons, multiple copy forms where the data on the various copies changes independently, and forms which have been supplemented by addenda and continuation forms used to contain information not provided for on the original version.

In some cases older coding structures have become vague or, worse, contain codes which have changed their meaning over time without corresponding changes in procedural documentation.

Newer, usually automated, systems employ codes whose meaning is embedded in program code, and procedures which have become so complex that only the programmers are fully versed in them. The operational and managerial personnel are thus forced to rely on the data processing staff to resolve problems whose solution rightly belongs elsewhere.

The newer systems in many cases have had "intelligence" built into identifiers which have become inadequate for current business needs. Account based firms (banks, etc.) have long constructed account identifiers from a combination of office number and some qualifier (usually a sequential number or a series of codes which identify not only the unique account, but also the type of account, account responsibility, etc.). Over time these codes have lost their meaning due to account movement, expansion of code ranges beyond the limits of the valid values (e.g. single digit numeric codes with more than ten possible values), or changes in code meaning which affect some accounts and not others.
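The way an "intelligent" identifier loses its meaning can be shown with a small sketch. The identifier layout, the account type names, and the function names below are all hypothetical; they only illustrate the two failure modes named above, account movement and exhaustion of a single digit code range.

```python
# Hypothetical "intelligent" account identifier: OOO-T-NNNNN
#   OOO   = number of the office that opened the account
#   T     = single-digit account type code
#   NNNNN = sequential number within the office
ACCOUNT_TYPES = {
    str(code): name
    for code, name in enumerate([
        "checking", "savings", "loan", "mortgage", "trust",
        "brokerage", "escrow", "payroll", "merchant", "custody",
    ])
}


def parse_account_id(account_id):
    """Split the identifier into the meanings embedded in it."""
    office, type_code, sequence = account_id.split("-")
    return {
        "office": office,
        "type": ACCOUNT_TYPES[type_code],
        "sequence": int(sequence),
    }


def can_add_account_type(types):
    """A single numeric digit can carry at most ten distinct values."""
    return len(types) < 10


def office_is_stale(account_id, current_office):
    """True when the office embedded in the identifier is no longer correct."""
    return parse_account_id(account_id)["office"] != current_office


account = "014-1-00372"
print(parse_account_id(account))
print("Room for an eleventh account type:", can_add_account_type(ACCOUNT_TYPES))
print("Identifier misleading after a move to office 027:",
      office_is_stale(account, "027"))
```

Once an account has moved, the identifier still reads as office 014, so any procedure that derives responsibility from the identifier will be wrong for that account and right for others.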

Perhaps the most prevalent type of data problem, however, is that of missing or inadequate data. This happens in just about every firm. Original assumptions as to the kinds of data which must be collected and maintained have been invalidated by changes in business conditions or business requirements. This is most readily evident with respect to the customer demographic information which is used for marketing analysis and customer service needs.

Perhaps the second most prevalent problem is data which has been collected and filed by the firm, but never maintained (kept up-to-date). This out-of-date data is usually kept in paper files for reference, although in many instances it may be found in automated files as well. Aside from being largely inaccurate, this data is also expensive to store, and dangerous if it is used for current processing or analysis. Much of this data is held on original employment applications, account opening forms, and other one-time-use forms which are filled out and filed away.

Other file problems arise from different kinds of data being stored in the same files, and in some instances the same records, making data maintenance inconsistent at best and impossible at worst. Examples of this are found in payroll systems which also carry employee data, account files which also contain customer data, policy files which contain customer data, and order files which also contain product data.

Still other types of problems come from files where different data in the same records has different currency, that is, different parts of the records reflect different instances in time, and from files containing the same data which reflect different instances in time, making data reconciliation and amalgamation difficult if it can be done at all.
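The notion of differing currency within a single record can be made concrete with a short sketch. The record layout, the field names, and the two-year threshold below are assumptions chosen for illustration, not a prescribed technique.

```python
from datetime import date

# Hypothetical employee record in which each field carries the date
# on which its value was last verified.
record = {
    "address": {"value": "12 Elm St", "as_of": date(2001, 3, 14)},
    "salary": {"value": 52_000, "as_of": date(2006, 1, 1)},
    "emergency_contact": {"value": "J. Smith", "as_of": date(1998, 6, 30)},
}


def stale_fields(rec, today, max_age_days):
    """Names of fields not verified within the allowed number of days."""
    return sorted(
        name
        for name, field in rec.items()
        if (today - field["as_of"]).days > max_age_days
    )


def currency_spread_days(rec):
    """Days between the oldest and the newest field in the same record."""
    dates = [field["as_of"] for field in rec.values()]
    return (max(dates) - min(dates)).days


today = date(2007, 1, 1)
print("Stale fields:", stale_fields(record, today, max_age_days=730))
print("Spread within record (days):", currency_spread_days(record))
```

A large spread within one record is a signal that its parts are maintained by different procedures, or not maintained at all.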

Some current data problems are related to growth in both the size of the files themselves and the number of operational personnel who need access to the same data. Increases in transaction volumes, changes in the complexity of those transactions, and the slow evolution of business systems due to continual minor (and sometimes major) modification all cause the performance of older systems (their ability to support current needs) to degrade. Operational personnel who were originally satisfied with same day response to queries now demand multi-second response. Managerial personnel who were originally satisfied with printed reports now require personal access to the data files for analysis.

The evolution of personal computing and the concurrent migration away from centralized data center support have caused heightened user awareness of both data and processing, and have increased the demand for data in ever increasing quantities and levels of detail. Firms which used to file data on microfiche or tape relatively soon after its use in current business processing are now being asked to keep increasing amounts of this data available for longer periods of time for immediate access by business personnel.

Technology has also played a part in causing real or perceived data problems in many firms. Newer, more flexible, more friendly Data Base Management Systems, the increased use of non-procedural languages and more friendly data manipulation techniques (fourth generation languages), and the increased computer literacy fostered and supported by desktop microcomputers of all types have caused new interest in data and its availability among business personnel. The proliferation of small, powerful processors with disproportionately large capacities for data storage, the evolution of newer methods of automated communication between processors of all sizes, regardless of location, and the ease of data transfer between these processors have weakened corporate control over data and in many cases reduced the reliability of the data which reaches management's attention.

Microcomputer based Data Base Management Systems which allow the user to collect new data, retrieve data from other processors and mix this data together for analysis, along with new tools for data analysis and manipulation all heighten corporate conflict over data. Microcomputer systems, departmental systems and centralized mainframe systems, along with manual (paper based) systems barely coexist and have created a quagmire of data problems, many of which were unforeseeable when the only data processing was performed on mainframes.

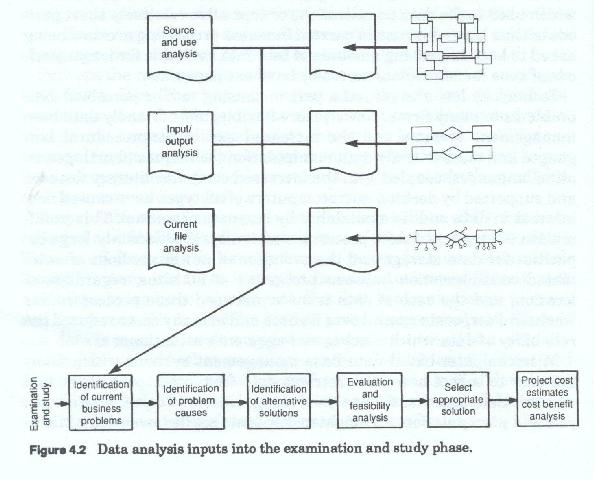

Causes of data problems

Although each analysis team must identify the specific set of problems and the specific set of causes (figure 4-2) for their environment, generally data problems are caused by one or more of the following:

Carrier to Data Evaluation

Carrier to data evaluation focuses on the data transactions to determine whether the data on the documents (data carriers) which enter the firm is passed consistently and accurately to all areas where it is needed.

It seeks to determine whether or not the firm is receiving the correct data, whether it understands the data that it is receiving (i.e. what the transmitter of that data intended), and whether it is using that data in a manner which is consistent with its origin.

Since, with few exceptions, the firm collects data on its own forms, and with even fewer exceptions the firm can specify what data it needs and in what form it needs that data, this level of evaluation should compare the firm's use of the incoming data with the data received, to ensure that the forms, instructions, and procedures for collection or acquisition and dissemination are consistent with the data's subsequent usage.
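The comparison of data received with data used can be sketched as a pair of set differences. The form fields and functional areas below are hypothetical and serve only to show the shape of the comparison.

```python
# Hypothetical inventory: the fields captured on an incoming form
# (the data carrier) and the fields each functional area says it uses.
form_fields = {"name", "address", "phone", "date_of_birth", "referral_source"}

fields_used_by_area = {
    "Customer Service": {"name", "address", "phone"},
    "Marketing": {"name", "address", "date_of_birth", "income_band"},
}


def compare_carrier_to_usage(collected, usage):
    """Report fields collected but never used, and fields used but never collected."""
    used = set().union(*usage.values())
    return {
        "collected_but_unused": sorted(collected - used),
        "needed_but_not_collected": sorted(used - collected),
    }


result = compare_carrier_to_usage(form_fields, fields_used_by_area)
for finding, fields in result.items():
    print(f"{finding}: {fields}")
```

Fields in the first group are candidates for removal from the form; fields in the second indicate that the form, or its instructions, no longer matches how the data is used.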

Zero-Based Evaluation

This evaluation approach is similar in many respects to Zero-Based Budgeting, in that it assumes nothing is known about the existing data. It is a "Start from Scratch" approach. All user forms, data and files are re-examined and re-justified. The reasons for each are documented and all data flows are retraced.

Zero-based evaluation should always be undertaken where the analysis indicates that the systems, processes, activities and procedures are undergoing re-systemization or re-automation. It should also be undertaken where the analysis indicates that the existing systems and automation may have been erroneous, or that the business requirements may have changed sufficiently to warrant this start from scratch approach.

In any case, the analyst should never assume that the original reasons for data being collected or processed are still valid. Each data transaction and each process must be examined as if it were being proposed for the first time, or for a new system. The analyst must ask:

Problem identification

Although many of the problems with the existing system, especially data problems, may have been known or suspected at the time of project initiation, the scope and extent of those problems, and in many cases the reasons for them, may not have been accurately known.

The analytical results represent not only the analysts' understanding of the current environment and the analysis team's diagnosis of the problems inherent in that environment, but also their understanding of the users' unfulfilled current requirements and their projections of user needs for the foreseeable future. The combination of these four aspects of the analysis will be used to devise a design for future implementation. Thus it is imperative that it be as correct and as complete as possible.

It is at this point that the analyst and user should have the detailed analytical documentation before them, as well as the results of the evaluation of that analysis.

In the interviews conducted during the data analysis activities of the systems analysis phase, each user should have been asked questions which sought to identify one or more of the following data problems:

Users were asked to identify areas where responsibilities for activities were either not specified or were not accompanied by the appropriate authority. Activities where responsibilities were split - where responsibility was shared across multiple functions - were identified.

Identical or highly similar activities which were performed in multiple areas were identified. Activities which appeared to be aimed at accomplishing the same purpose but which were performed in highly dissimilar manners were identified, and the causes for those dissimilarities were identified.

During the interviews, the users were also asked to enumerate processes and activities which were not currently being performed, and which could not be performed or could only be performed with great difficulty in the current environment, but which the users felt should be performed, especially if that difficulty had to do with missing, incorrect or inaccessible data.

As the analysis progressed, the analysts should have been able to identify areas of opportunity. Areas of opportunity result from the synergy between data and process, data and data, or process and process: synergy which has created the ability to achieve a corporate mission or goal, to provide a new service, to create a new product, or to provide new information about the firm's products, competitors, customers or markets, but where that opportunity has not as yet been identified or taken advantage of.

System Design Requirements Document

The product of the Examination and Study phase is a document which is usually referred to as a System Design Requirements Document, or a Client Requirements Document. This document classifies, catalogues, and enumerates all existing and identified problems and opportunities, by function, process, activity, task and procedure. For each of these it lists the change requirements or specifications - the specifications and requirements for correcting the problems or the possible methods of taking advantage of the identified opportunities.

Simply stated, this is the verified, documented list of all of the places and ways in which system and procedural changes have fallen behind the business activities which they were intended to support. It also documents all of the ways in which the users anticipate changes to occur, or in which changes which have occurred have created opportunities to take advantage of recognized synergies within and between business activities and among the resources of the business.

Prioritizing of changes

Each item pair - problem and requirement or specification, or opportunity and proposed method or approach - is evaluated against all other pairs and prioritized. The prioritization may be performed by any one of a number of available methods such as:

The above methods (and variations thereof) are the most prevalent ones. While they are necessary and accomplish part of the goal of analysis, there are two other rankings which should be performed by both the user and the analysis and design team: dependency ranking and synergy or impact/benefit ranking.

Dependency ranking

Dependency ranking seeks to determine item dependencies: which items form the foundation for other items, and which items must be accomplished before certain others. It assumes that there are three types of changes which must be made during any new system design:

Foundation changes - changes upon which all others are based. These include changes in charter, responsibility assignment or technology; development of a new product, service or technology; selection of a new market; finalization of a corporate reorganization; movement to a new location or modernization of an existing location; etc.

Architectural and procedural changes - changes which can be designed and developed once the foundation items are determined. These are basic structural changes, and form the bulk of the difficult and usually time consuming system design tasks. These include changes in data gathering, validation, verification, storage and evaluation procedures. They also include all of the activities which aggregate and regroup those procedures into activities, the activities into processes, and the processes into systems.

Presentation, Display or Cosmetic changes - many changes which have been identified result from changes in user perception and perspective, or from the users' increased awareness of their environment and needs. These result in requests for changes in report form and content, faster access to existing data, or improved methods of accomplishing existing tasks. These changes usually do not require structural or architectural changes. Each of these changes, while small in itself, may, when added to the vast number of other similar changes, require the largest part of the design team's energy.
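Dependency ranking can be sketched as a topological ordering of the change items, in which every item appears after the items it depends upon. The sketch below uses the standard library's `graphlib.TopologicalSorter` (Python 3.9 or later); the change items, their classification into the three types, and their prerequisites are hypothetical examples.

```python
from graphlib import TopologicalSorter

# Hypothetical change items, each tagged with one of the three change
# types and the items which must be accomplished before it.
changes = {
    "select new market": ("foundation", []),
    "redesign order validation": ("architectural", ["select new market"]),
    "restructure customer file": ("architectural", ["select new market"]),
    "new sales report layout": ("presentation", ["restructure customer file"]),
}


def dependency_order(items):
    """Return the items in an order that respects every prerequisite."""
    graph = {name: set(prereqs) for name, (_kind, prereqs) in items.items()}
    return list(TopologicalSorter(graph).static_order())


order = dependency_order(changes)
for position, name in enumerate(order, start=1):
    kind = changes[name][0]
    print(f"{position}. {name} ({kind})")
```

If two items depend on each other, `TopologicalSorter` raises `graphlib.CycleError`, which is itself a useful finding: the two changes cannot be ranked independently and must be designed together.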

Synergy or impact/benefit ranking

Synergy or impact/benefit ranking is the most difficult task to perform in this phase, but it is also the one where the major benefits to the firm exist. Although it is termed a ranking, in reality it is the examination of each item or combination of items (the items in this case being proposed changes or modifications) to assess:

Cost benefit ranking

The Examination and Study phase usually includes a cost benefit analysis of each proposed change, and an evaluation of the costs and benefits of each change with respect to its various rankings and prioritizations. The item by item cost benefit analysis provides another (and sometimes the most definitive) method of evaluating the proposed change specifications and requirements.

Cost Benefit Analysis

The Examination and Study phase is completed when a cost benefit analysis for the selected approach has been developed for the system design phase.

From the data perspective, each item of data that is collected, and each procedure put in place to collect, maintain or present data, has a cost. Since each data item must have all three types of procedures to be useful, the cost of data is, at a minimum, the cost of the procedures and of the effort necessary to perform them. There is additional cost associated with storage and media. These costs must be assessed during the cost benefit analysis.

There are additional costs which must also be ascertained, most of which accrue from the costs of new technology which are usually associated with significant upgrades in data capability. These costs include:

Any costs associated with new hardware to house and support the new products

The benefits are achieved from reductions of those costs through more efficient processing and higher data availability.

In some cases the benefits may be achieved through actual increases in revenue, or faster collections, or more rapid turnover, or increased productivity. Some examples of benefits to be looked for, and estimated, in this area are:

Indirect benefits are those which cannot be quantified, or assigned a monetary value, but which nonetheless result in desirable outcomes from the project. These might include:

A cost benefit analysis may be as short as a single page or may cover many pages. In format it is similar in style to a standard budget, with costs being equated to the expense side, and benefits being equated to the income side. All cost items should be subtotaled by category and an overall total cost figure computed. All benefits should be subtotaled by category and a total benefit figure computed. In more complete cost benefit analyses, both the costs and the benefits would be "spread" across the project or phase time frame, with the costs and projected benefits.
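The budget-style layout described above, with subtotals by category and an overall total for each side, can be sketched as follows. All line items, categories, and amounts are hypothetical figures chosen for illustration, and the net figure is simply total benefits less total costs.

```python
from collections import defaultdict

# Hypothetical line items: (side, category, description, amount).
line_items = [
    ("cost", "hardware", "additional disk storage", 40_000),
    ("cost", "software", "DBMS license", 25_000),
    ("cost", "personnel", "design team effort", 60_000),
    ("benefit", "cost reduction", "less manual re-keying", 70_000),
    ("benefit", "revenue", "faster collections", 80_000),
]


def summarize(items):
    """Subtotal each side by category, then total each side and net them."""
    subtotals = {"cost": defaultdict(int), "benefit": defaultdict(int)}
    for side, category, _description, amount in items:
        subtotals[side][category] += amount
    totals = {side: sum(cats.values()) for side, cats in subtotals.items()}
    net = totals["benefit"] - totals["cost"]
    return subtotals, totals, net


subtotals, totals, net = summarize(line_items)
for side in ("cost", "benefit"):
    print(side.upper())
    for category, amount in subtotals[side].items():
        print(f"  {category:<16}{amount:>10,}")
    print(f"  {'total':<16}{totals[side]:>10,}")
print(f"NET BENEFIT       {net:>10,}")
```

Only quantifiable items belong in such a table; benefits which cannot be assigned a monetary value would be listed separately in narrative form.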

The data requirements of the operational level units, while extensive, rarely change since they are contingent upon fixed sources of input.

However, when the data requirements do change, the result can sometimes be chaotic for it usually means some basic aspect of the business has also changed.

Data Analysis, Data Modeling and Classification

Written by Martin E. Modell

Copyright © 2007 Martin E. Modell

All rights reserved. Printed in the United States of America. Except as permitted under United States Copyright Act of 1976, no part of this publication may be reproduced or distributed in any form or by any means, or stored in a data base or retrieval system, without the prior written permission of the author.